Countering AI Deception: Defending Against Phishing, Voice Cloning and Deepfake Attacks

Artificial intelligence is transforming cyber threats. Attackers now use generative models to craft convincing emails, clone voices and produce synthetic videos that evade traditional defenses. For security professionals already familiar with phishing and social engineering, the challenge is to understand the technical innovations behind these attacks and to adapt defenses accordingly. This article examines the mechanics of AI-powered phishing, voice phishing (vishing) and deepfakes, and offers practical guidance for building a resilient defense.

The Evolution of AI Threats

Early phishing campaigns were scattershot, and their poor grammar and generic messages often gave them away. Today’s adversaries use generative AI and large language models to produce polished, context-aware communications. They scrape social media and corporate press releases to personalize messages and mimic internal tone. At the same time, neural speech synthesis lets attackers clone executive voices, and face-swapping models, some built on generative adversarial networks, let them impersonate colleagues on camera. Video deepfakes combine facial mapping and neural rendering to fabricate realistic video conferences in which every participant except the victim is a synthetic replica.

These tools lower the barrier to entry for cybercrime. Open-source image generators such as Stable Diffusion, together with publicly available speech synthesis libraries, let attackers produce believable images, audio and video on modest hardware. Malicious chatbots such as WormGPT allow non-technical criminals to generate phishing scripts, while data brokers sell profiles that feed hyper-specific targeting. The result is an ecosystem in which social engineering is more automated, scalable and effective than ever before.

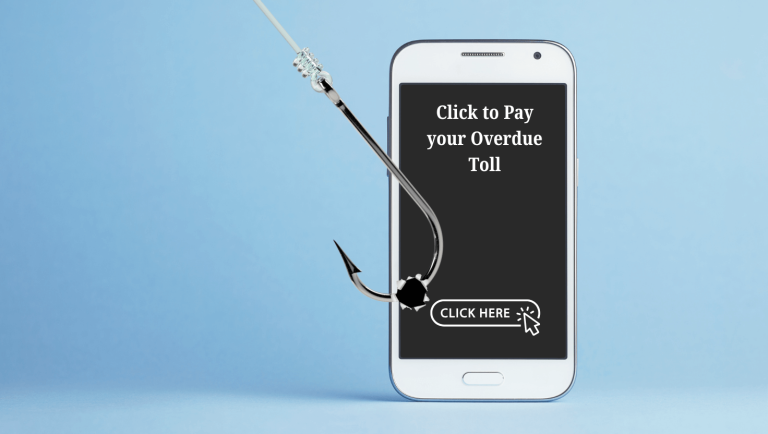

Advanced Phishing and AI-Driven Natural Language Generation

Modern phishing emails no longer betray themselves through typos, and they rarely rely on urgency alone. Attackers use AI to analyze prior communications, such as threads taken from a compromised mailbox, and mirror the writing style of a colleague or vendor. Threat actors collect open-source intelligence (OSINT) to craft context-rich content, such as a reference to a recent project or a real invoice number. Some campaigns also use browser fingerprinting and behavioral analytics to serve the phishing content only when the target’s usual device connects, which makes the attack harder for sandboxes and scanners to detect.

Technical defenses must evolve. Domain-based Message Authentication, Reporting and Conformance (DMARC), Sender Policy Framework (SPF) and DomainKeys Identified Mail (DKIM) remain essential for validating sender authenticity, but they are not enough: they confirm that a message is authorized by the domain it claims to come from, not that its content is legitimate, so mail sent from a compromised account or from an attacker-owned look-alike domain can pass all three checks. Security teams should therefore deploy machine learning filters that perform semantic analysis to spot language anomalies and unusual sentiment. Natural language understanding models can flag messages with subtle contextual inconsistencies that humans might miss. User training must also move beyond generic phishing simulations: scenarios should include AI-generated messages that accurately mimic internal style and incorporate real business context.
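As a minimal illustration of the authentication checks above, the Python sketch below reads the `Authentication-Results` header (defined in RFC 8601) that a receiving mail gateway stamps on each message, and quarantines anything without a DMARC `pass`. It assumes your own gateway identifies itself with the hypothetical authserv-id `mx.example.com`; headers from any other hop are ignored because an attacker can insert forged ones. The regular-expression parsing is deliberately simplified and is not a complete RFC 8601 parser.

```python
import re
from email import message_from_string
from email.message import Message

# Matches "method=result" pairs such as "spf=pass" or "dmarc=fail".
# Simplified: comments and property values are not parsed.
_RESULT_RE = re.compile(r"\b(spf|dkim|dmarc)\s*=\s*([a-z]+)", re.IGNORECASE)


def auth_results(msg: Message, authserv_id: str) -> dict:
    """Collect SPF/DKIM/DMARC verdicts stamped by our own gateway only."""
    results = {}
    for header in msg.get_all("Authentication-Results", []):
        # The authserv-id is the first token before the first ";".
        head, _, body = header.partition(";")
        tokens = head.split()
        if not tokens or tokens[0].lower() != authserv_id.lower():
            continue  # ignore headers added by other, possibly forged, hops
        for method, verdict in _RESULT_RE.findall(body):
            # Keep the first verdict reported for each method.
            results.setdefault(method.lower(), verdict.lower())
    return results


def should_quarantine(msg: Message, authserv_id: str) -> bool:
    """Quarantine unless DMARC explicitly passed; a missing result counts as failure."""
    return auth_results(msg, authserv_id).get("dmarc") != "pass"


# Hypothetical example: a spoofed "vendor" message asking to change bank details.
raw = (
    "Authentication-Results: mx.example.com; spf=softfail "
    "smtp.mailfrom=vendor.test; dkim=none; dmarc=fail header.from=vendor.test\n"
    "From: billing@vendor.test\n"
    "Subject: Updated bank details\n"
    "\n"
    "Please send future payments to the new account below.\n"
)
msg = message_from_string(raw)
print(auth_results(msg, "mx.example.com"))  # {'spf': 'softfail', 'dkim': 'none', 'dmarc': 'fail'}
print(should_quarantine(msg, "mx.example.com"))  # True
```

Treating a missing DMARC result as a failure is a conservative choice. In practice this verdict would be one input to the semantic and behavioral filters described above rather than the only gate, since a look-alike domain can still pass.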

Voice Cloning and AI-Crafted Vishing

Voice synthesis technologies have advanced rapidly. Neural models can generate speech in another person’s voice from as little as a few seconds of reference audio. Attackers use these models to place phone calls that sound like a trusted executive requesting a wire transfer or an IT administrator asking for login credentials. They also automate interactive voice response (IVR) systems that collect sensitive data through seemingly legitimate prompts.

Countermeasures must address both technical detection and procedural controls. Voice biometric systems should include liveness detection to confirm that a live speaker is present rather than a recording or synthetic audio. Useful techniques include randomized prompts the caller must repeat, background noise analysis and checks on timing variations that are difficult for a synthetic voice to reproduce in real time. Critical requests delivered by voice should require multi-channel verification, such as a separate confirmation through a secure messaging platform. Callback procedures, in which staff hang up and call the requester back on a number taken from the internal directory rather than one supplied by the caller, combined with pre-agreed passphrases for high-value transactions, ensure that a vishing call cannot succeed solely on trust in a familiar voice.
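The procedural controls above can be sketched in Python as a simple policy check. Everything here is hypothetical: the `DIRECTORY` mapping, the threshold and the function names are illustrative, and storing passphrases as salted PBKDF2 hashes is an assumption chosen so that the pre-agreed phrases are never kept in clear text. The essential property is that the callback number comes from the directory, never from the caller.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

# Hypothetical internal directory. Callback numbers come from HR/IT records,
# never from caller ID or from anything the caller says during the call.
DIRECTORY = {"cfo@example.com": "+1-555-0100"}

PBKDF2_ITERATIONS = 600_000  # illustrative work factor


def hash_passphrase(passphrase: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest) so the pre-agreed passphrase is never stored in clear text."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.pbkdf2_hmac("sha256", passphrase.encode(), salt, PBKDF2_ITERATIONS)
    return salt, digest


def passphrase_matches(candidate: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the digest with the stored salt and compare in constant time."""
    _, digest = hash_passphrase(candidate, salt)
    return hmac.compare_digest(digest, expected)


def passes_voice_verification(
    identity: str,
    amount: float,
    threshold: float,
    called_back_on: str,
    passphrase_ok: bool,
    second_channel_confirmed: bool,
) -> bool:
    """A familiar voice alone never authorizes a request at or above the threshold."""
    if amount < threshold:
        return True  # below threshold: no extra voice-specific checks in this sketch
    expected_number = DIRECTORY.get(identity)
    return (
        expected_number is not None
        and called_back_on == expected_number  # callback used the directory number
        and passphrase_ok
        and second_channel_confirmed  # e.g. confirmed on a secure messaging platform
    )


# Usage: enrol a passphrase, then check an urgent "CFO" wire-transfer call.
salt, stored = hash_passphrase("blue heron at dawn")
ok = passphrase_matches("blue heron at dawn", salt, stored)
print(passes_voice_verification("cfo@example.com", 250_000, 10_000,
                                "+1-555-0100", ok, second_channel_confirmed=False))  # False
```

Requiring all three checks together means a cloned voice that also knows the passphrase still fails without the directory callback and the second-channel confirmation.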

Deepfakes and Video Impersonation

Deepfake technology uses generative models to manipulate or create video content where faces and voices are altered to impersonate real individuals. Attackers can stage video calls in which everyone on the call is synthetic except the victim. Deepfakes can also be used to spread disinformation or damage reputations.

Detecting video deepfakes requires specialized algorithms that analyze texture inconsistencies, eye-blink patterns and temporal artifacts such as flicker between frames. Security teams should evaluate tools that integrate with conferencing platforms to assess the authenticity of participants. In high-stakes meetings, consider dynamic verification methods such as randomized motion prompts, in which participants are asked to perform actions, like turning their head to full profile or passing a hand in front of their face, that real-time deepfakes struggle to render convincingly. For recorded videos and uploaded content, digital watermarking and cryptographic signatures can establish provenance, allowing recipients to verify that media originated from a trusted source and has not been altered.
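To make the eye-blink signal concrete, here is a Python sketch of one classic heuristic: the eye aspect ratio (EAR), computed from six eye landmarks per frame, with a blink counted whenever EAR stays below a threshold for a few consecutive frames. It assumes a separate face-landmark detector (not shown) supplies the landmarks or per-frame EAR values; the 0.21 threshold and the plausible range of 4 to 40 blinks per minute are illustrative guesses, not calibrated values. Newer deepfakes often blink convincingly, so an abnormal rate should be treated as one weak signal among many, never as proof.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks: p1/p4 are the eye corners, p2/p3 the upper lid,
    p6/p5 the lower lid. EAR drops toward zero as the eye closes."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float], threshold: float = 0.21,
                 min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was closed
        blinks += 1
    return blinks


def blink_rate_is_suspicious(ear_series: Sequence[float], fps: float,
                             low: float = 4.0, high: float = 40.0) -> bool:
    """Flag clips whose blinks per minute fall outside an illustrative [low, high] range."""
    minutes = len(ear_series) / fps / 60.0
    if minutes < 0.5:
        raise ValueError("need at least 30 seconds of video for a stable blink rate")
    rate = count_blinks(ear_series) / minutes
    return not (low <= rate <= high)


# Usage with synthetic data: 60 s at 30 fps in which the eyes never close.
staring = [0.3] * 1800
print(blink_rate_is_suspicious(staring, fps=30))  # True
```

Requiring several consecutive low-EAR frames filters out single-frame landmark jitter, at the cost of missing very fast blinks in low frame-rate video.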

Building a Defense Strategy

Responding to AI-driven social engineering requires an integrated approach that combines technology, processes and people. Key elements include:

- Identity-Centric Security: Implement multi-factor authentication (MFA) across all sensitive systems, preferring phishing-resistant methods such as hardware security keys or passkeys, which are bound to the legitimate site and cannot be replayed through a phishing page. Adopt risk-based authentication that adjusts controls based on behavioral analytics and device reputation.

- AI-Enhanced Detection: Use machine learning models to analyze inbound communications across email, voice and chat channels. These models should assess linguistic features, acoustic signatures and behavioral context to detect anomalies.

- Segregation of Duties and Transaction Controls: For financial transactions and sensitive data changes, enforce workflows that require approval from at least two authorized individuals other than the requester. Automatic alerts and holds on transactions above a threshold provide additional time for verification.

- Continuous Education: Regularly update awareness training to cover AI-generated threats. Include examples of polished phishing emails, voice clones and deepfake videos. Encourage a culture where employees feel comfortable challenging unusual requests.

- Incident Response Preparation: Develop playbooks for responding to successful AI driven attacks. This includes rapid containment of compromised accounts, forensic analysis of synthetic media and communication plans to inform stakeholders without spreading panic.
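The segregation-of-duties and threshold-hold controls in the list above can be sketched in Python as follows. The approver names, the 50,000 threshold, the two-approval rule and the 24-hour hold are hypothetical values chosen for illustration, not recommendations; a real system would also deliver the alert and persist every approval in an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Set

# Hypothetical policy values for illustration only.
AUTHORIZED_APPROVERS = {"alice", "bob", "carol"}
REQUIRED_APPROVALS = 2
HOLD_THRESHOLD = 50_000.0
HOLD_PERIOD = timedelta(hours=24)


@dataclass
class Transfer:
    requester: str
    amount: float
    created_at: datetime
    approvers: Set[str] = field(default_factory=set)


def requires_hold(t: Transfer) -> bool:
    """Large transfers trigger an alert and a mandatory waiting period."""
    return t.amount >= HOLD_THRESHOLD


def approve(t: Transfer, approver: str) -> None:
    """Record an approval, enforcing segregation of duties."""
    if approver == t.requester:
        raise PermissionError("requesters cannot approve their own transfers")
    if approver not in AUTHORIZED_APPROVERS:
        raise PermissionError(f"{approver} is not an authorized approver")
    t.approvers.add(approver)  # a set, so repeat approvals by one person count once


def can_release(t: Transfer, now: datetime) -> bool:
    """Release only with enough distinct approvals and, if held, after the hold expires."""
    if len(t.approvers) < REQUIRED_APPROVALS:
        return False
    return not requires_hold(t) or now - t.created_at >= HOLD_PERIOD


# Usage: an urgent 120,000 transfer requested after a convincing "CEO" call.
start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
wire = Transfer(requester="dave", amount=120_000.0, created_at=start)
approve(wire, "alice")
approve(wire, "bob")
print(requires_hold(wire))                            # True
print(can_release(wire, start + timedelta(hours=1)))  # False: still on hold
print(can_release(wire, start + timedelta(hours=25)))  # True
```

Storing approvers in a set means one person approving twice cannot satisfy the two-person rule, which is the property segregation of duties depends on. The hold gives staff time to run the callback and second-channel checks before money leaves the account.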

Conclusion

AI is transforming both the offensive and defensive sides of cybersecurity. While attackers use generative models to automate deception, defenders can use machine learning to detect subtle anomalies and enforce adaptive controls. By understanding the technical foundations of AI-powered phishing, vishing and deepfakes, and by implementing layered defenses that combine identity-centric security, advanced analytics and robust processes, organizations can stay ahead of these evolving threats and maintain trust in their communications.